Exploring the Potential of Neuromorphic Computing

Neuromorphic computing has potential applications in many fields. One notable application is artificial intelligence and machine learning. Because neuromorphic systems process information with brain-inspired, event-driven networks of artificial neurons, they can run neural networks efficiently, which could support advances in areas such as facial recognition, natural language processing, and pattern recognition.
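To make "brain-inspired" more concrete, here is a minimal sketch of a leaky integrate-and-fire neuron, a simplified neuron model commonly used in neuromorphic research. The article does not name a specific model, so the function name simulate_lif and the threshold, leak, and reset values are illustrative assumptions rather than a description of any particular chip.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Simulate a discrete-time leaky integrate-and-fire neuron.

    All parameter values are illustrative assumptions.
    Returns a list of 0/1 spikes, one per input sample.
    """
    potential = reset
    spikes = []
    for current in input_current:
        # Keep a fraction of the stored potential (the leak), then add the input.
        potential = leak * potential + current
        if potential >= threshold:
            spikes.append(1)
            potential = reset  # fire, then return to the resting value
        else:
            spikes.append(0)
    return spikes


if __name__ == "__main__":
    weak = simulate_lif([0.05] * 20)    # input too small to ever reach threshold
    strong = simulate_lif([0.5] * 20)   # input large enough to fire repeatedly
    print(sum(weak), sum(strong))
```

In this sketch a weak input produces no spikes at all, while a stronger input produces a regular spike train, so the neuron only signals when its input is significant.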

Neuromorphic computing also shows potential in robotics. Because neuromorphic systems mimic the neural structure of the human brain, they are well suited to controlling autonomous robots and helping them navigate their environment more effectively. By processing sensory data in real time and adapting to changing conditions, neuromorphic computing can enhance the capabilities of robotic systems in tasks such as object manipulation, spatial awareness, and decision-making.

Current Challenges in Neuromorphic Computing Research

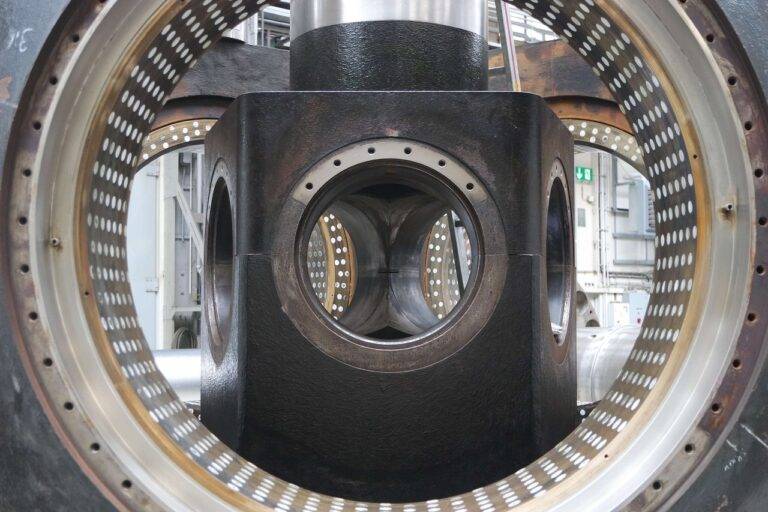

Despite this promise, several challenges impede the widespread adoption and advancement of neuromorphic computing. One key challenge is developing efficient hardware architectures that can accurately mimic the complex, dynamic neural processes of the human brain. Current neuromorphic systems often struggle to reach the level of computational power and energy efficiency needed to fully realize their potential.

Another significant challenge in neuromorphic computing research revolves around software development. Designing algorithms that can effectively utilize the unique capabilities of neuromorphic hardware remains a major obstacle. Adapting existing algorithms from traditional computing paradigms to suit the parallel and distributed nature of neuromorphic systems is a complex task that requires innovative solutions. Additionally, there is a need for standardized programming frameworks and tools that can simplify the development process and enable researchers to harness the full capabilities of neuromorphic hardware.
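One small example of the adaptation work described above is input encoding: conventional algorithms operate on numeric values, while spiking hardware operates on spike trains. The sketch below shows rate coding, one commonly used encoding, under the assumption that inputs are normalized to the range 0 to 1. The names rate_encode and rate_decode are hypothetical helpers and do not come from any specific neuromorphic framework.

```python
import random


def rate_encode(values, num_steps, seed=0):
    """Encode values in [0, 1] as random 0/1 spike trains.

    Each value is used as the probability of a spike at every time step,
    so larger values produce denser spike trains.
    """
    rng = random.Random(seed)  # fixed seed keeps the sketch reproducible
    trains = []
    for value in values:
        if not 0.0 <= value <= 1.0:
            raise ValueError("values must lie in [0, 1]")
        trains.append([1 if rng.random() < value else 0 for _ in range(num_steps)])
    return trains


def rate_decode(trains):
    """Estimate each original value as the fraction of steps that spiked."""
    return [sum(train) / len(train) for train in trains]


if __name__ == "__main__":
    trains = rate_encode([0.1, 0.5, 0.9], num_steps=1000)
    print(rate_decode(trains))  # approximate recovery of the inputs
```

The decoded values only approximate the inputs, and the approximation improves with more time steps. That trade-off between precision and latency is one reason existing algorithms rarely port over unchanged.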

Advantages of Neuromorphic Computing over Traditional Computing

Neuromorphic computing holds several advantages over traditional computing methods. One key benefit is its ability to mimic the way the human brain processes information, which can allow complex problems to be handled more efficiently. This approach can improve performance on machine learning and artificial intelligence workloads such as pattern recognition.

Additionally, neuromorphic computing systems have the potential for lower power consumption than traditional computers. Because their design mirrors the brain’s neural network architecture, these systems can do work only when neurons emit spikes rather than on every clock cycle, which can increase energy efficiency and offers a promising way to meet the growing demand for sustainable computing technologies.
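The energy argument can be illustrated with a rough operation count. The sketch below compares a conventional dense layer, which touches every weight on every step, with an event-driven update that only touches weights for inputs that actually spiked. Operation count is used here as a stand-in for energy, which is an assumption: real power consumption depends on the hardware. The function names dense_ops and event_driven_step are hypothetical.

```python
def dense_ops(inputs, weights):
    """Operations for a dense layer: every input meets every output."""
    return len(inputs) * len(weights[0])


def event_driven_step(spikes, weights):
    """Accumulate weights only for inputs that spiked.

    weights[i][j] is the connection from input i to output j.
    Returns (output totals, number of additions performed).
    """
    num_outputs = len(weights[0])
    totals = [0.0] * num_outputs
    ops = 0
    for i, spike in enumerate(spikes):
        if not spike:
            continue  # silent inputs cost nothing in this model
        for j in range(num_outputs):
            totals[j] += weights[i][j]
            ops += 1
    return totals, ops


if __name__ == "__main__":
    weights = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]
    spikes = [0, 1, 0, 0]  # only one of four inputs is active
    totals, ops = event_driven_step(spikes, weights)
    print(totals, ops, dense_ops(spikes, weights))
```

When only a small fraction of inputs are active at any moment, the event-driven count is a correspondingly small fraction of the dense count. When most inputs are active, the advantage largely disappears.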

• Mimics the way the human brain processes information

• Can handle complex problems more efficiently

• Can improve performance on machine learning and artificial intelligence workloads such as pattern recognition

• Potential for lower power consumption than traditional computers

• Greater energy efficiency from mirroring the brain’s neural network architecture

• A promising option for sustainable computing technologies

What are some potential applications of neuromorphic computing?

Some potential applications of neuromorphic computing include robotics, autonomous vehicles, image and speech recognition, and healthcare diagnostics.

What are some current challenges in neuromorphic computing research?

Some current challenges in neuromorphic computing research include scalability, power efficiency, and programming complexity.

What are the advantages of neuromorphic computing over traditional computing?

The advantages of neuromorphic computing over traditional computing include potentially higher energy efficiency, faster processing of workloads that suit its architecture, and the ability to mimic the parallel processing capabilities of the human brain.